The post Deploy Contract With Custom Metadata Hash appeared first on Justin Silver.

When you deploy a Solidity contract, the compiler has removed the comments from the bytecode, but the last 32 bytes of the bytecode are actually a hash of:

- hashed bytecode

- compiler settings

- contract source including comments

When you verify a contract on Etherscan, or SonicScan, this hash is also checked to make sure the verified contract has the correct license, etc. even if the bytecode itself matches. So… can we deploy a contract with a custom hash that will let us verify contracts with customized comments?
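To make the idea concrete, here is a minimal TypeScript sketch of swapping the trailing 32 bytes of a hex-encoded bytecode string off-chain. The helper name replaceTrailingHash and the sample bytes are made up for illustration, and it assumes, as described above, that the hash occupies exactly the last 32 bytes.

```typescript
// Hypothetical helper (not part of the original post): swap the trailing
// 32 bytes of hex-encoded bytecode for a different 32-byte hash.
function replaceTrailingHash(bytecodeHex: string, newHashHex: string): string {
  const strip = (hex: string): string => (hex.startsWith('0x') ? hex.slice(2) : hex);
  const body = strip(bytecodeHex);
  const hash = strip(newHashHex);

  // 32 bytes are 64 hex characters.
  if (hash.length !== 64) throw new Error('hash must be exactly 32 bytes');
  if (body.length <= 64) throw new Error('bytecode too short');

  return '0x' + body.slice(0, body.length - 64) + hash;
}

// Made-up sample: a short prefix followed by a 32-byte placeholder hash.
const original = '0x6080604052' + 'aa'.repeat(32);
const patched = replaceTrailingHash(original, '0x' + 'bb'.repeat(32));
console.log(patched.slice(-64) === 'bb'.repeat(32)); // true
```

The factory contract performs the same replacement on-chain, byte by byte, before calling create.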

This contract will take a custom metadata hash and update the bytecode of our contract before deploying a new instance of it.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.0;

import {YourContract} from './YourContract.sol';

contract CustomFactory {

event ContractDeployed(address indexed contractAddress, bytes32 metadataHash);

error BytecodeTooShort();

/**

* @notice Deploys a new contract with a modified metadata hash.

* @param newMetadataHash The new metadata hash to append to the bytecode.

*/

function deployWithCustomMetadata(bytes32 newMetadataHash) external returns (address) {

// Get the creation code of the contract

bytes memory bytecode = abi.encodePacked(type(YourContract).creationCode);

if (bytecode.length <= 32) revert BytecodeTooShort();

// Replace the last 32 bytes of the bytecode with the new metadata hash

for (uint256 i = 0; i < 32; i++) {

bytecode[bytecode.length - 32 + i] = newMetadataHash[i];

}

// Deploy the contract with the modified bytecode

address deployedContract;

assembly {

deployedContract := create(0, add(bytecode, 0x20), mload(bytecode))

if iszero(deployedContract) {

// if there was an error, revert and provide the bytecode

revert(add(0x20, bytecode), mload(bytecode))

}

}

emit ContractDeployed(deployedContract, newMetadataHash);

return deployedContract;

}

}

This is a sample contract containing the comment /* YourContract */, which we will replace with our custom comment.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.0;

/* YourContract */

contract YourContract {

/**

* @notice Constructor for the contract.

*/

constructor() {}

/**

* @notice Returns the stored message.

*/

function getMessage() external pure returns (string memory) {

return 'hello world';

}

}

This is a Hardhat test that loads necessary information from build info and artifacts provided by Hardhat, but you can also create this manually.

// Hardhat test for CustomFactory and YourContract

import * as fs from 'fs/promises';

import * as path from 'path';

import { expect } from 'chai';

import { ethers } from 'hardhat';

import { encode as cborEncode } from 'cbor';

import { loadFixture } from '@nomicfoundation/hardhat-network-helpers';

import { AddressLike, ContractTransactionResponse } from 'ethers';

import { YourContract } from 'sdk/types';

function calculateMetadataHash(sourceCode: string, comment: string, originalMetadata: any): string {

const keccak256 = ethers.keccak256;

const toUtf8Bytes = ethers.toUtf8Bytes;

// Inject the comment

const modifiedSourceCode = sourceCode.replace(/\/\* YourContract \*\//gs, comment);

// console.log('modifiedSourceCode', modifiedSourceCode);

// Calculate keccak256 of the modified source code

const sourceCodeHash = keccak256(toUtf8Bytes(modifiedSourceCode));

// Use original metadata as a base

const metadata = {

...originalMetadata,

sources: {

'contracts/YourContract.sol': {

...originalMetadata.sources['contracts/YourContract.sol'],

keccak256: sourceCodeHash,

},

},

};

// CBOR encode the metadata and calculate the hash

const cborData = cborEncode(metadata);

const metadataHash = keccak256(cborData);

return metadataHash;

}

export const fixture = async () => {

const Factory = await ethers.getContractFactory('CustomFactory');

const factory = await Factory.deploy();

await factory.waitForDeployment();

const [owner] = await ethers.getSigners();

return { factory, owner };

};

export async function getDeployedContractAddress(

tx: Promise<ContractTransactionResponse> | ContractTransactionResponse,

): Promise<AddressLike> {

const _tx = tx instanceof Promise ? await tx : tx;

const _receipt = await _tx.wait();

const _interface = new ethers.Interface([

'event ContractDeployed(address indexed contractAddress, bytes32 metadataHash)',

]);

const _data = _receipt?.logs[0].data;

const _topics = _receipt?.logs[0].topics;

const _event = _interface.decodeEventLog('ContractDeployed', _data || ethers.ZeroHash, _topics);

return _event.contractAddress;

}

describe('CustomFactory and YourContract', function () {

it('should deploy a contract with a custom metadata hash', async function () {

const { factory } = await loadFixture(fixture);

// Load source code of YourContract from file

const sourceCodePath = path.join(__dirname, '../contracts/YourContract.sol');

const sourceCode = await fs.readFile(sourceCodePath, 'utf8');

const comment = ('/* My Contract */');

// Load original metadata from build-info

const buildInfoPath = path.join(__dirname, `../artifacts/build-info`);

const buildInfoFiles = await fs.readdir(buildInfoPath);

let buildInfo: any;

for (const file of buildInfoFiles) {

const fullPath = path.join(buildInfoPath, file);

const currentBuildInfo = JSON.parse(await fs.readFile(fullPath, 'utf8'));

if (currentBuildInfo.output.contracts['contracts/YourContract.sol']) {

buildInfo = currentBuildInfo;

break;

}

}

if (!buildInfo) {

throw new Error('Build info for YourContract not found.');

}

const originalMetadata = buildInfo.output.contracts['contracts/YourContract.sol'].YourContract.metadata;

// Calculate the metadata hash

const newMetadataHash = calculateMetadataHash(sourceCode, comment, JSON.parse(originalMetadata));

// Deploy the contract via the Factory

const tx = await factory.deployWithCustomMetadata(newMetadataHash);

const deployedAddress = await getDeployedContractAddress(tx);

// console.log('deployedAddress', deployedAddress);

// Validate the deployment

expect(deployedAddress).to.be.properAddress;

// Interact with the deployed contract

const YourContract = await ethers.getContractFactory('YourContract');

const yourContract = YourContract.attach(deployedAddress) as YourContract;

// Ensure it was initialized properly

const message = await yourContract.getMessage();

expect(message).to.equal('hello world');

// Get the bytecode of the deployed contract

const deployedBytecode = await ethers.provider.getCode(deployedAddress);

// Get the last 32 bytes of the deployed bytecode

const deployedBytecodeHash = deployedBytecode.slice(-64);

// Validate that the hash matches our new metadata hash

expect('0x' + deployedBytecodeHash).to.equal(newMetadataHash);

});

});

The post NGINX Feature Flag Reverse Proxy appeared first on Justin Silver.

Use NGINX as a reverse proxy to different back-end servers based on feature flags set via the ngx_http_auth_request_module add-on. In my implementation for Secret Party the subdomain is used to determine which event/party a request belongs to. Note that this is a template file and some variables are set from the environment – $DOMAIN, $PROXY_*_HOST, $PROXY_*_PORT, etc.

Upstream Servers

First create the upstream servers that we can proxy the request to. Here we will use “green”, “blue”, and “red”.

# this is the server that handles the "auth" request

upstream upstream_auth {

server $PROXY_AUTH_HOST:$PROXY_AUTH_PORT;

}

# backend app server "green"

upstream upstream_green {

server $PROXY_GREEN_HOST:$PROXY_GREEN_PORT;

}

# backend app server "blue"

upstream upstream_blue {

server $PROXY_BLUE_HOST:$PROXY_BLUE_PORT;

}

# backend app server "red"

upstream upstream_red {

server $PROXY_RED_HOST:$PROXY_RED_PORT;

}

Mappings

Next we create a mapping of route name to upstream server. This will let us choose the backend/upstream server without an evil if.

# map service names from auth header to upstream service

map $wildcard_feature_route $wildcard_service_route {

default upstream_green;

'green' upstream_green;

'blue' upstream_blue;

'red' upstream_red;

}

Optionally we can also support arbitrary response codes in this mapping – note that they will be strings, not numbers. This uses the auth response code to choose the route name, which the mapping above then resolves to an upstream server – so HTTP status code, to route string, to upstream server.

# map http codes from auth response (as string!) to upstream service

map $wildcard_backend_status $wildcard_mapped_route {

default 'green';

'480' 'green';

'481' 'blue';

'482' 'red';

}
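As a rough illustration (this is TypeScript, not NGINX configuration), the two map blocks behave like chained lookup tables with defaults. The names mappedRoute and serviceRoute are hypothetical and only mirror the maps above.

```typescript
// Mirrors the second map: auth status code (as a string) -> route name.
const statusToRoute = new Map<string, string>([
  ['480', 'green'],
  ['481', 'blue'],
  ['482', 'red'],
]);

// Mirrors the first map: route name -> upstream server name.
const routeToUpstream = new Map<string, string>([
  ['green', 'upstream_green'],
  ['blue', 'upstream_blue'],
  ['red', 'upstream_red'],
]);

// Unknown status codes fall back to the map default, 'green'.
function mappedRoute(statusCode: string): string {
  return statusToRoute.get(statusCode) ?? 'green';
}

// Unknown or missing route names fall back to the map default, upstream_green.
function serviceRoute(route: string | undefined): string {
  return (route !== undefined ? routeToUpstream.get(route) : undefined) ?? 'upstream_green';
}

console.log(serviceRoute(mappedRoute('481'))); // upstream_blue
console.log(serviceRoute(undefined)); // upstream_green
```

Keeping the status-to-route and route-to-upstream steps separate means a new backend only needs one entry in each table.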

Auth Handler

The Auth Handler is where NGINX sends the auth request so we assume we are handling something like http://upstream_auth/feature-flags/$host. This endpoint chooses the route that we use either by setting a header called X-Feature-Route with a string name that matches the mapping above, or can respond with a 4xx error code to also specify a route from the other mapping above. You get the gist.

function handleFeatureFlag(req, res) {

// use the param/header data to choose the backend route

// const hostname = req.params.hostname;

const route = someFlag ? 'green' : 'blue';

// this header is used to figure out a proxy route

res.header('X-Feature-Route', route);

return res.status(200).send();

}

function handleFeatureFlag(req, res) {

// this http response code can be used to figure out a proxy route too!

const status = someFlag ? 481 : 482; // blue, red

return res.status(status).send();

}

Server Configuration

To tie it together, create a server that uses an auth request to http://upstream_auth/feature-flags/$host. This API endpoint uses the hostname to choose the upstream service that fulfills the request, either by setting an X-Feature-Route header or by returning a status code other than 200 or 401 – NGINX treats anything else as a 500, and can then use the string value of the original code as a route hint.

server {

listen 80;

# listen on wildcard subdomains

server_name *.$DOMAIN;

# internal feature flags route to upstream_auth

location = /feature-flags {

internal;

# make an api request for the feature flags, pass the hostname

rewrite .* /feature-flags/$host? break;

proxy_pass http://upstream_auth;

proxy_pass_request_body off;

proxy_set_header Content-Length "";

proxy_set_header X-Original-URI $request_uri;

proxy_set_header X-Original-Remote-Addr $remote_addr;

proxy_set_header X-Original-Host $host;

}

# handle all requests for the wildcard

location / {

# get routing from feature flags

auth_request /feature-flags;

# set Status Code response to variable

auth_request_set $wildcard_backend_status $upstream_status;

# set X-Feature-Route header to variable

auth_request_set $wildcard_feature_route $upstream_http_x_feature_route;

# this is a 401 response

error_page 401 = @process_backend;

# anything not a 200 or 401 returns a 500 error

error_page 500 = @process_backend;

# this is a 200 response

try_files @ @process_request;

}

# handle 500 errors to get the underlying code

location @process_backend {

# set the status code as a string mapped to a service name

set $wildcard_feature_route $wildcard_mapped_route;

# now process the request as normal

try_files @ @process_request;

}

# send the request to the correct backend server

location @process_request {

proxy_read_timeout 10s;

proxy_cache off;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# use the mapping to determine which service to route the request to

proxy_pass http://$wildcard_service_route;

}

}
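The flow through this server block can be traced with a small TypeScript sketch. It assumes the documented auth_request behaviour (a 2xx allows the request, 401 and 403 deny it with that code, and any other status is treated as an error that surfaces as a 500). The name handlerFor is hypothetical and not part of any NGINX API.

```typescript
type Handler = '@process_request' | '@process_backend' | 'returned to client';

// Which part of the config ends up handling a request, given the status
// code returned by the auth subrequest.
function handlerFor(authStatus: number): Handler {
  // 2xx: access allowed, try_files falls through to @process_request.
  if (authStatus >= 200 && authStatus < 300) return '@process_request';
  // 401: caught by "error_page 401 = @process_backend".
  if (authStatus === 401) return '@process_backend';
  // 403: denied as-is; this config defines no error_page for it.
  if (authStatus === 403) return 'returned to client';
  // Anything else is an auth error, i.e. a 500, caught by "error_page 500".
  return '@process_backend';
}

for (const code of [200, 401, 403, 481]) {
  console.log(code, handlerFor(code));
}
```

In the @process_backend case the original status code is still available in $wildcard_backend_status, which is what lets the second map turn it into a route name.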

The post Slither & Echidna + Remappings appeared first on Justin Silver.

While testing a project using Hardhat and Echidna I was able to run all tests in the project with echidna-test . but was not able to run tests in a specific contract that imported contracts using NPM and the node_modules directory, such as @openzeppelin. When running echidna-test the following error would be returned:

> echidna-test path/to/my/Contract.sol --contract Contract

echidna-test: Couldn't compile given file

stdout:

stderr:

ERROR:CryticCompile:Invalid solc compilation Error: Source "@openzeppelin/contracts/utils/Address.sol" not found: File not found. Searched the following locations: "".

--> path/to/my/Contract.sol:4:1:

|

4 | import {Address} from '@openzeppelin/contracts/utils/Address.sol';

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

To fix this, I added Solc Remappings for Slither and Echidna.

Install Echidna (and Slither)

Make sure that you have Slither and Echidna installed. Follow the install instructions on their sites, or on OSX with Homebrew run brew install echidna.

Slither Remapping Config

Create a Slither JSON config file – named slither.config.json – to use filter_paths to exclude some directories and provide remappings for node_modules to solc.

{

"filter_paths": "(mocks/|test/|@openzeppelin/)",

"solc_remaps": "@=node_modules/@"

}

Slither will pick up the config file automatically.

slither path/to/my/Contract.sol

For multiple remappings, use an array of strings for solc_remaps.

{

"filter_paths": "(mocks/|test/|@openzeppelin/)",

"solc_remaps": [

"@openzeppelin/contracts/=node_modules/@openzeppelin/contracts/",

"@openzeppelin/contracts-upgradeable/=node_modules/@openzeppelin/contracts-upgradeable/"

]

}
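To see what a remapping does to an import path, here is a simplified TypeScript sketch. It assumes the longest matching prefix wins and ignores the optional context: part that solc remappings also support; resolveImport is a hypothetical name, not a Slither or solc API.

```typescript
// Simplified model of prefix=target remappings (assumption: longest prefix wins).
function resolveImport(importPath: string, remappings: string[]): string {
  let best: { prefix: string; target: string } | undefined;

  for (const remap of remappings) {
    const eq = remap.indexOf('=');
    if (eq === -1) continue; // not a prefix=target pair
    const prefix = remap.slice(0, eq);
    const target = remap.slice(eq + 1);
    if (importPath.startsWith(prefix) && (!best || prefix.length > best.prefix.length)) {
      best = { prefix, target };
    }
  }

  // No matching remapping: the path is left untouched.
  return best ? best.target + importPath.slice(best.prefix.length) : importPath;
}

console.log(resolveImport('@openzeppelin/contracts/utils/Address.sol', ['@=node_modules/@']));
// node_modules/@openzeppelin/contracts/utils/Address.sol
```

The single "@=node_modules/@" remapping covers every scoped package, while the array form lets you remap individual packages to different locations.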

Note that if you are using Hardhat or similar for your projects, slither will use it for the compile if a configuration can be found.

slither .

To force a particular compiler, specify it with the command.

slither --compile-force-framework solc ./contracts

Echidna Remapping Config

For Echidna we can create a YAML config file and pass the solc remappings to crytic-compile via cryticArgs.

# provide solc remappings to crytic-compile
cryticArgs: ['--solc-remaps', '@=node_modules/@']

When running echidna-test we can use the --config option to specify the YAML config file and pick up our remappings (and other settings).

echidna-test --config echidna.yaml path/to/my/Contract.sol --contract Contract

Bonus Mythril Remapping Config!

{

"remappings": ["@openzeppelin/=node_modules/@openzeppelin/"]

}

myth analyze --solc-json mythril.solc.json path/to/my/Contract.sol

The post UnsafeMath for Solidity 0.8.0+ appeared first on Justin Silver.

UPDATE: Check out the @0xdoublesharp/unsafe-math module available on NPM for an easy to use, prepackaged, and tested version of this library!

UnsafeMath is a Solidity library used to perform unchecked, or “unsafe”, math operations. Prior to version 0.8.0 all math was unchecked, meaning that subtracting 1 from 0 would underflow and result in the max uint256 value. This behavior led many contracts to use the OpenZeppelin SafeMath library to perform checked math – using the prior example, subtracting 1 from 0 would throw an exception as a uint256 is unsigned and therefore cannot be negative. In Solidity 0.8.0+ all math operations became checked, but at a cost of more gas used per operation.
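The difference between the two behaviors can be sketched in TypeScript with BigInt. This only models uint256 arithmetic for illustration; uncheckedSub and checkedSub are hypothetical names and not part of the library.

```typescript
const UINT256_MAX = (1n << 256n) - 1n;

// Unchecked: wrap the result into the uint256 range, like pre-0.8.0 math
// or an `unchecked` block.
function uncheckedSub(a: bigint, b: bigint): bigint {
  return BigInt.asUintN(256, a - b);
}

// Checked: throw instead of wrapping, like default math in 0.8.0+.
function checkedSub(a: bigint, b: bigint): bigint {
  if (b > a) throw new RangeError('arithmetic underflow');
  return a - b;
}

console.log(uncheckedSub(0n, 1n) === UINT256_MAX); // true

try {
  checkedSub(0n, 1n);
} catch (err) {
  console.log((err as Error).message); // arithmetic underflow
}
```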

Unchecked Math Library

The UnsafeMath library allows you to perform unchecked math operations where you are confident the result will not be an underflow or an overflow of the uint256 space – saving gas in your contracts where checked math is not needed.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.0;

// solhint-disable func-name-mixedcase

library UnsafeMath {

function unsafe_add(uint256 a, uint256 b) internal pure returns (uint256) {

unchecked {

return a + b;

}

}

function unsafe_sub(uint256 a, uint256 b) internal pure returns (uint256) {

unchecked {

return a - b;

}

}

function unsafe_div(uint256 a, uint256 b) internal pure returns (uint256) {

unchecked {

uint256 result;

// solhint-disable-next-line no-inline-assembly

assembly {

result := div(a, b)

}

return result;

}

}

function unsafe_mul(uint256 a, uint256 b) internal pure returns (uint256) {

unchecked {

return a * b;

}

}

function unsafe_increment(uint256 a) internal pure returns (uint256) {

unchecked {

return ++a;

}

}

function unsafe_decrement(uint256 a) internal pure returns (uint256) {

unchecked {

return --a;

}

}

}

Gas Usage Tests

This test contract uses the UnsafeMath.unsafe_increment() and UnsafeMath.unsafe_decrement() functions alongside their checked counterparts to test the difference in gas used between the different methods.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.15;

import './UnsafeMath.sol';

contract TestUnsafeMath {

using UnsafeMath for uint256;

uint256 private _s_foobar;

function safeDecrement(uint256 count) public {

for (uint256 i = count; i > 0; --i) {

_s_foobar = i;

}

}

function safeIncrement(uint256 count) public {

for (uint256 i = 0; i < count; ++i) {

_s_foobar = i;

}

}

function unsafeDecrement(uint256 count) public {

for (uint256 i = count; i > 0; i = i.unsafe_decrement()) {

_s_foobar = i;

}

}

function unsafeIncrement(uint256 count) public {

for (uint256 i = 0; i < count; i = i.unsafe_increment()) {

_s_foobar = i;

}

}

}

Using a simple Mocha setup, our tests will call each of the contract functions with an argument for 100 iterations.

import { ethers } from 'hardhat';

import { ContractFactory } from '@ethersproject/contracts';

import { TestUnsafeMath } from '../sdk/types';

describe('UnsafeMath', () => {

let testUnsafeMathDeploy: ContractFactory, testUnsafeMathContract: TestUnsafeMath;

beforeEach(async () => {

testUnsafeMathDeploy = await ethers.getContractFactory('TestUnsafeMath', {});

testUnsafeMathContract = (await testUnsafeMathDeploy.deploy()) as TestUnsafeMath;

});

describe('Gas Used', () => {

it('safeDecrement gas used', async () => {

const tx = await testUnsafeMathContract.safeDecrement(100);

// const receipt = await tx.wait();

// console.log(receipt.gasUsed.toString(), 'gasUsed');

});

it('safeIncrement gas used', async () => {

const tx = await testUnsafeMathContract.safeIncrement(100);

// const receipt = await tx.wait();

// console.log(receipt.gasUsed.toString(), 'gasUsed');

});

it('unsafeDecrement gas used', async () => {

const tx = await testUnsafeMathContract.unsafeDecrement(100);

// const receipt = await tx.wait();

// console.log(receipt.gasUsed.toString(), 'gasUsed');

});

it('unsafeIncrement gas used', async () => {

const tx = await testUnsafeMathContract.unsafeIncrement(100);

// const receipt = await tx.wait();

// console.log(receipt.gasUsed.toString(), 'gasUsed');

});

});

});

The results show that a checked incrementing loop used 60276 gas, checked decrementing used 59424 gas, unchecked incrementing used 58117 gas, and unchecked decrementing came in at 57473 gas.

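That’s a savings of 2803 gas on a 100 iteration loop when comparing the checked incrementing loop to the unchecked decrementing loop, or 4.65% of the gas used by the checked incrementing loop.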
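The arithmetic behind the comparison can be reproduced with a few lines of TypeScript. The gas figures are the ones reported above; the variable names are only for illustration.

```typescript
// Gas used per call, as reported by the test run (100 iterations each).
const gasUsed = {
  safeIncrement: 60276,
  safeDecrement: 59424,
  unsafeIncrement: 58117,
  unsafeDecrement: 57473,
};

// Most expensive loop versus cheapest loop.
const saved = gasUsed.safeIncrement - gasUsed.unsafeDecrement;
const percent = (saved / gasUsed.safeIncrement) * 100;

// Like-for-like comparisons: same loop direction, checked versus unchecked.
const savedIncrement = gasUsed.safeIncrement - gasUsed.unsafeIncrement;
const savedDecrement = gasUsed.safeDecrement - gasUsed.unsafeDecrement;

console.log(saved, percent.toFixed(2) + '%');
console.log(savedIncrement, savedDecrement);
```

The like-for-like numbers separate the saving that comes from unchecked math from the saving that comes from counting down instead of up.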

UnsafeMath

Gas Used

✓ safeDecrement gas used

✓ safeIncrement gas used

✓ unsafeDecrement gas used

✓ unsafeIncrement gas used

Solc version: 0.8.15 · Optimizer enabled: true · Runs: 999999 · Block limit: 30000000 gas

Methods

| Contract       | Method          | Min | Max | Avg   | # calls | usd (avg) |
|----------------|-----------------|-----|-----|-------|---------|-----------|
| TestUnsafeMath | safeDecrement   | -   | -   | 59424 | 1       | -         |
| TestUnsafeMath | safeIncrement   | -   | -   | 60276 | 1       | -         |
| TestUnsafeMath | unsafeDecrement | -   | -   | 57473 | 1       | -         |
| TestUnsafeMath | unsafeIncrement | -   | -   | 58117 | 1       | -         |

Deployments

| Contract       | Min | Max | Avg    | % of limit | usd (avg) |
|----------------|-----|-----|--------|------------|-----------|
| TestUnsafeMath | -   | -   | 188806 | 0.6 %      | -         |

4 passing (2s)

The post NFT – Mint Random Token ID appeared first on Justin Silver.

The perceived value of many NFTs is based on that item’s rarity, making it ideal to mint them fairly. Rarity snipers and bad actors on a team can scoop up rare items from a collection in an attempt to further profit on the secondary market. How can you fairly distribute the tokens – both to the community and the project team?

One solution is to hide the metadata until after reveal and mint the token IDs out of order – using a provable random number, a pseudo random number, a pseudo random number seeded with a provable random beacon, or other options depending on your security needs. For provable random numbers check out Provable Randomness with VDF.

How It Works

uint16[100] public ids;

uint16 private index;

function _pickRandomUniqueId(uint256 random) private returns (uint256 id) {

uint256 len = ids.length - index++;

require(len > 0, 'no ids left');

uint256 randomIndex = random % len;

id = ids[randomIndex] != 0 ? ids[randomIndex] : randomIndex;

ids[randomIndex] = uint16(ids[len - 1] == 0 ? len - 1 : ids[len - 1]);

ids[len - 1] = 0;

}

We can efficiently track which IDs have – and have not – been minted by starting with a cheap-to-create array of zero values whose size matches your total supply. The array size could suffice in lieu of tracking the index, but tracking the index is more gas efficient than pop()ing the array. For each round it will select a random index, bounded by the remaining supply – we will call this a “roll” as in “roll of the dice” except we will reduce the number of sides by one for each round.

Round 0

When we start, the Data array will match the supply we want to create (six) and be empty (all zeroes), as will our Results, which are just empty (this represents the token ids that would be minted).

Index:    0  1  2  3  4  5
--------------------------
Data:    [0, 0, 0, 0, 0, 0]
Results: []

Round 1: 3

For the first round, let’s say it’s a 3. We look at the third index, check to see if it is 0, and if it is we return the index – this will make more sense in a moment. Next we look at the last position in the array given our remaining supply, and if it is a 0 we move that index to the 3 position we rolled.

Index:    0  1  2 *3* 4  5
---------------------------
Data:    [0, 0, 0, 0, 0, 0]
Results: []
<< before
after >>
Data:    [0, 0, 0, 5, 0]
Results: [3]

Round 2: 3

In the previous step when we check an index for a value, if a value was set at that index we would use it rather than the index. To demonstrate this, let’s assume we rolled a 3 again. This time we look at the third position and it contains a 5, so we return that instead of a 3. This is great, because we already selected a 3 and we want these to be unique. Again we look at the last position, and since it is not set we set the index 4 as the value of index 3.

Index:    0  1  2 *3* 4  5
---------------------------
Data:    [0, 0, 0, 5, 0]
Results: [3]
<< before
after >>
Data:    [0, 0, 0, 4]
Results: [3, 5]

Round 3: 2

Next, we roll a 2. We look at position 2, it’s not set, so we return a 2, again a number we haven’t selected previously. Next we check the last position, which now has a 4 set, so it is moved into index 2 as we have yet to select it.

Index:    0  1 *2* 3  4  5
---------------------------
Data:    [0, 0, 0, 4]
Results: [3, 5]
<< before
after >>
Data:    [0, 0, 4]
Results: [3, 5, 2]

Round 4: 1

We roll a 1. Position 1 is not set, so we return a 1. The last position contains a 4, so we move it into index 1.

Index:    0 *1* 2  3  4  5
---------------------------
Data:    [0, 0, 4]
Results: [3, 5, 2]
<< before
after >>
Data:    [0, 4]
Results: [3, 5, 2, 1]

Round 5: 1

We roll a 1 again. This time we return the 4, but since there is nothing to move into its place, we move on.

Index:    0 *1* 2  3  4  5
---------------------------
Data:    [0, 4]
Results: [3, 5, 2, 1]
<< before
after >>
Data:    [0]
Results: [3, 5, 2, 1, 4]

Round 6: 0

Lastly, we get a 0, since that’s all that remains. It both contains a 0 and is in that position so we select a 0.

Index:   *0* 1  2  3  4  5
---------------------------
Data:    [0]
Results: [3, 5, 2, 1, 4]
<< before
after >>
Data:    []
Results: [3, 5, 2, 1, 4, 0]

TLDR;

Each index of the array tracks an unminted ID. If the position isn’t set, that ID hasn’t been minted. If it is set, it’s because the last position was moved to it when the available indexes shrank and the last index wasn’t the one selected so we want to preserve it. If you want to start minting at 1, add 1.
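The rounds above can be replayed off-chain with a small TypeScript port of _pickRandomUniqueId. This is a sketch for illustration only; the class name UniqueIdPicker is hypothetical, and plain numbers stand in for uint16/uint256.

```typescript
class UniqueIdPicker {
  private readonly ids: number[];
  private index = 0;

  constructor(supply: number) {
    // All zeroes: a zero at position i means "ID i has not been moved".
    this.ids = new Array<number>(supply).fill(0);
  }

  pick(random: number): number {
    // Remaining supply, i.e. the number of "sides" left for this roll.
    const len = this.ids.length - this.index;
    if (len <= 0) throw new Error('no ids left');
    this.index++;

    const randomIndex = random % len;
    // Use the stored value if one was moved here, otherwise the index itself.
    const id = this.ids[randomIndex] !== 0 ? this.ids[randomIndex] : randomIndex;

    // Preserve the last available ID by moving it into the slot just used.
    const last = this.ids[len - 1];
    this.ids[randomIndex] = last === 0 ? len - 1 : last;
    this.ids[len - 1] = 0;
    return id;
  }
}

// Replay the six rolls from the walkthrough with a supply of six.
const picker = new UniqueIdPicker(6);
const results = [3, 3, 2, 1, 1, 0].map((roll) => picker.pick(roll));
console.log(results); // [ 3, 5, 2, 1, 4, 0 ]
```

Every ID from 0 to 5 appears exactly once, whatever the rolls are, because each pick removes one ID from the shrinking range.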

Pseudo Random

Uses a pseudo random number to select from a unique set of token IDs.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.11;

import '@openzeppelin/contracts/token/ERC721/ERC721.sol';

contract RandomTokenIdv1 is ERC721 {

uint16[100] public ids;

uint16 private index;

constructor() ERC721('RandomIdv1', 'RNDMv1') {}

function mint(address[] calldata _to) external {

for (uint256 i = _to.length; i != 0; ) {

unchecked{ --i; }

uint256 _random = uint256(keccak256(abi.encodePacked(index, msg.sender, block.timestamp, blockhash(block.number-1))));

_mint(_to[i], _pickRandomUniqueId(_random));

}

}

function _pickRandomUniqueId(uint256 random) private returns (uint256 id) {

uint256 len = ids.length - index;

require(len != 0, 'no ids left');

// increment only after computing len so every ID can be minted

unchecked{ ++index; }

uint256 randomIndex = random % len;

id = ids[randomIndex] != 0 ? ids[randomIndex] : randomIndex;

ids[randomIndex] = uint16(ids[len - 1] == 0 ? len - 1 : ids[len - 1]);

ids[len - 1] = 0;

}

}

Provable Random

Uses a provable random number and derivatives to select from a unique set of token IDs.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.11;

import '@openzeppelin/contracts/token/ERC721/ERC721.sol';

import './libraries/Randomness.sol';

import './libraries/SlothVDF.sol';

contract RandomTokenIdv2 is ERC721 {

using Randomness for Randomness.RNG;

Randomness.RNG private _rng;

mapping(address => uint256) public seeds;

uint256 public prime = 432211379112113246928842014508850435796007;

uint256 public iterations = 1000;

uint16[100] public ids;

uint16 private index;

constructor() ERC721('RandomIdv2', 'RNDMv2') {}

function createSeed() external payable {

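// assumes getRandom() returns (randomness, random) as used in mint()

(, uint256 _random) = _rng.getRandom();

seeds[msg.sender] = _random;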

}

function mint(address[] calldata _to, uint256 proof) external {

require(SlothVDF.verify(proof, seeds[msg.sender], prime, iterations), 'Invalid proof');

uint256 _randomness = proof;

uint256 _random;

for (uint256 i = _to.length; i != 0; ) {

unchecked{ --i; }

(_randomness, _random) = _rng.getRandom(_randomness);

_mint(_to[i], _pickRandomUniqueId(_random));

}

}

function _pickRandomUniqueId(uint256 random) private returns (uint256 id) {

uint256 len = ids.length - index;

require(len != 0, 'no ids left');

// increment only after computing len so every ID can be minted

unchecked{ ++index; }

uint256 randomIndex = random % len;

id = ids[randomIndex] != 0 ? ids[randomIndex] : randomIndex;

ids[randomIndex] = uint16(ids[len - 1] == 0 ? len - 1 : ids[len - 1]);

ids[len - 1] = 0;

}

}

Random Beacon

Uses a provable random number as a beacon which is used as the seed for a pseudo random number and derivatives to select from a unique set of token IDs.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.11;

import '@openzeppelin/contracts/access/Ownable.sol';

import '@openzeppelin/contracts/token/ERC721/ERC721.sol';

import './libraries/Randomness.sol';

import './libraries/SlothVDF.sol';

contract RandomTokenIdv3 is ERC721, Ownable {

using Randomness for Randomness.RNG;

Randomness.RNG private _rng;

uint16[100] public ids;

uint16 private index;

uint256 public prime = 432211379112113246928842014508850435796007;

uint256 public iterations = 1000;

uint256 public seed;

uint256 public beacon;

constructor() ERC721('RandomTokenIdv3', 'RNDMv3') {}

// create a seed - use something interesting for the input.

function createSeed() external onlyOwner {

(, uint256 _random) = _rng.getRandom();

seed = _random;

}

// once calculated set the beacon

function setBeacon(uint256 proof) external {

require(SlothVDF.verify(proof, seed, prime, iterations), 'Invalid proof');

beacon = proof;

}

function mint(address[] calldata _to) external {

require(beacon != 0, 'Beacon not set');

uint256 _randomness = beacon;

uint256 _random;

for (uint256 i = _to.length; i != 0; ) {

unchecked{ --i; }

(_randomness, _random) = _rng.getRandom(_randomness);

_mint(_to[i], _pickRandomUniqueId(_random));

}

}

function _pickRandomUniqueId(uint256 random) private returns (uint256 id) {

uint256 len = ids.length - index;

require(len != 0, 'no ids left');

// increment only after computing len so every ID can be minted

unchecked{ ++index; }

uint256 randomIndex = random % len;

id = ids[randomIndex] != 0 ? ids[randomIndex] : randomIndex;

ids[randomIndex] = uint16(ids[len - 1] == 0 ? len - 1 : ids[len - 1]);

ids[len - 1] = 0;

}

}

The post Provable Randomness with VDF appeared first on Justin Silver.

A Verifiable Delay Function (VDF) is a sequentially computed function that takes a relatively long time to calculate; however, the resulting proof can be verified to be the result of this computation in a much shorter period of time. Since the computation can’t be sped up through parallelization or other tricks we can be sure that for a given seed the resulting value can’t be known ahead of time – thus making it a provable random number.

We can apply this to a blockchain to achieve provable randomness without an oracle by having the client compute the VDF. This process takes two transactions – the first to commit to the process and generate a seed for the VDF input, and the second to submit the calculated proof. If the length of time to calculate the VDF proof exceeds the block finality for the chain you are using, then the result of the second transaction can’t be known when the seed is generated and can thus be used as a provable random number. For more secure applications you can use multiple threads to calculate multiple VDF proofs concurrently, or for less strict requirements you can bitshift the value to get “new” random numbers.

Provable Random Numbers

The good stuff first – provable random numbers without an oracle. The user first makes a request to createSeed(), typically with a commitment such as payment. This seed value, along with the large prime and number of iterations, is used to calculate the VDF proof – the larger the prime and the higher the iterations, the longer the proof takes to calculate, so both can be adjusted as needed. As long as the proof takes longer to compute than the block finality we know it’s random since it’s not possible to know the result before it’s too late to change it. A blockchain like Fantom is ideal for this application with block times of ~1 second and finality after one block – validators cannot reorder blocks once they are minted.

This proof is then passed in to the prove() function. It uses the previously created seed – which now can’t be changed – and other inputs to verify the proof. If it passes, the value can be used as a random number, or can be passed into another function (as below) to create multiple random numbers by shifting the bits on each request for a random(ish) number.
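To show why computing the proof is slow while verifying it is fast, here is a rough TypeScript sketch of a sloth-style VDF. It assumes a prime congruent to 3 mod 4 (the example uses the Mersenne prime 2^127 - 1), and the names computeProof and verifyProof are hypothetical; the SlothVDF library used by the contract may differ in its details.

```typescript
// Modular exponentiation by squaring.
function modPow(base: bigint, exponent: bigint, modulus: bigint): bigint {
  let result = 1n;
  let b = base % modulus;
  let e = exponent;
  while (e > 0n) {
    if ((e & 1n) === 1n) result = (result * b) % modulus;
    b = (b * b) % modulus;
    e >>= 1n;
  }
  return result;
}

// Slow direction: one full modular exponentiation (a square root) per iteration.
function computeProof(seed: bigint, prime: bigint, iterations: number): bigint {
  const exponent = (prime + 1n) >> 2n; // valid when prime % 4 == 3
  let value = seed % prime;
  for (let i = 0; i < iterations; i++) value = modPow(value, exponent, prime);
  return value;
}

// Fast direction: one modular squaring per iteration, then compare to +/- seed.
function verifyProof(proof: bigint, seed: bigint, prime: bigint, iterations: number): boolean {
  let value = proof % prime;
  for (let i = 0; i < iterations; i++) value = (value * value) % prime;
  const s = seed % prime;
  return value === s || value === (prime - s) % prime;
}

const prime = (1n << 127n) - 1n; // assumption: small example prime, 3 mod 4
const seed = 123456789n;
const iterations = 100;

const proof = computeProof(seed, prime, iterations);
console.log(verifyProof(proof, seed, prime, iterations)); // true
```

Each compute step needs roughly as many multiplications as the prime has bits, while each verify step needs one, which is where the asymmetry comes from.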

Smart Contract

You can find large primes for your needs using https://bigprimes.org/, potentially even rotating them. Note that the code below is an example and should not be used directly without modifying for your needs.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.11;

import './libraries/SlothVDF.sol';

contract RandomVDFv1 {

// large prime

uint256 public prime = 432211379112113246928842014508850435796007;

// adjust for block finality

uint256 public iterations = 1000;

// increment nonce to increase entropy

uint256 private nonce;

// address -> vdf seed

mapping(address => uint256) public seeds;

function createSeed() external payable {

// commit funds/tokens/etc here

// create a pseudo random seed as the input

seeds[msg.sender] = uint256(keccak256(abi.encodePacked(msg.sender, nonce++, block.timestamp, blockhash(block.number - 1))));

}

function prove(uint256 proof) external {

// see if the proof is valid for the seed associated with the address

require(SlothVDF.verify(proof, seeds[msg.sender], prime, iterations), 'Invalid proof');

// use the proof as a provable random number

uint256 _random = proof;

}

}
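The Sloth library used by this contract takes modular square roots by raising to the power (prime + 1) / 4, which only works when the prime leaves a remainder of 3 when divided by 4. A quick check for a candidate prime might look like the sketch below; isSlothCompatible is a hypothetical helper name, and it does not test primality, only the congruence.

```typescript
// Hypothetical helper: Sloth computes square roots as x^((p + 1) / 4) mod p,
// which is only an actual square root (of x or of -x) when p % 4 == 3.
function isSlothCompatible(prime: bigint): boolean {
  return prime % 4n === 3n;
}

// The prime from the contract above.
const prime = 432211379112113246928842014508850435796007n;

console.log(isSlothCompatible(prime)); // true: the decimal value ends in 07, and 7 % 4 == 3
console.log(isSlothCompatible(13n)); // false: 13 % 4 == 1
```

If you rotate primes, running this check on each candidate before storing it would avoid proofs that can never verify.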

Hardhat Example

This code is an example Hardhat script for calling the RandomVDFv1 contract. It shows the delay to calculate a proof and attempts to submit it. In a real implementation this could be an NFT mint, etc.

import { ethers, deployments } from 'hardhat';

import { RandomVDFv1 } from '../sdk/types';

import sloth from './slothVDF';

async function main() {

// We get the signer

const [signer] = await ethers.getSigners();

// get the contracts

const deploy = await deployments.get('RandomVDFv1');

const token = (await ethers.getContractAt('RandomVDFv1', deploy.address, signer)) as RandomVDFv1;

// the prime and iterations from the contract

const prime = BigInt((await token.prime()).toString());

const iterations = BigInt((await token.iterations()).toNumber());

console.log('prime', prime.toString());

console.log('iterations', iterations.toString());

// create a new seed

const tx = await token.createSeed();

await tx.wait();

// get the seed

const seed = BigInt((await token.seeds(signer.address)).toString());

console.log('seed', seed.toString());

// compute the proof

const start = Date.now();

const proof = sloth.compute(seed, prime, iterations);

console.log('compute time', Date.now() - start, 'ms', 'vdf proof', proof);

// this could be a mint function, etc

const proofTx = await token.prove(proof);

await proofTx.wait();

}

main().catch((error) => {

console.error(error);

process.exit(1);

});

Sloth Verifiable Delay

This off-chain implementation of the Sloth VDF in TypeScript lets us compute the proof on the client.

const bexmod = (base: bigint, exponent: bigint, modulus: bigint) => {

let result = 1n;

for (; exponent > 0n; exponent >>= 1n) {

if (exponent & 1n) {

result = (result * base) % modulus;

}

base = (base * base) % modulus;

}

return result;

};

const sloth = {

compute(seed: bigint, prime: bigint, iterations: bigint) {

const exponent = (prime + 1n) >> 2n;

let beacon = seed % prime;

for (let i = 0n; i < iterations; ++i) {

beacon = bexmod(beacon, exponent, prime);

}

return beacon;

},

verify(beacon: bigint, seed: bigint, prime: bigint, iterations: bigint) {

for (let i = 0n; i < iterations; ++i) {

beacon = (beacon * beacon) % prime;

}

seed %= prime;

if (seed === beacon) return true;

if (prime - seed === beacon) return true;

return false;

},

};

export default sloth;
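To see what compute and verify are doing, it helps to run them on a prime small enough to check by hand. The sketch below re-implements the same logic in standalone functions (modPow, slothCompute and slothVerify are illustrative names) and uses the toy prime 23, which is far too small for real use.

```typescript
// Square-and-multiply modular exponentiation, same shape as bexmod above.
function modPow(base: bigint, exponent: bigint, modulus: bigint): bigint {
  let result = 1n;
  for (let e = exponent; e > 0n; e >>= 1n) {
    if ((e & 1n) === 1n) result = (result * base) % modulus;
    base = (base * base) % modulus;
  }
  return result;
}

// Each iteration takes a modular square root of the previous value (or of its negation).
function slothCompute(seed: bigint, prime: bigint, iterations: bigint): bigint {
  const exponent = (prime + 1n) >> 2n;
  let beacon = seed % prime;
  for (let i = 0n; i < iterations; ++i) beacon = modPow(beacon, exponent, prime);
  return beacon;
}

// Verification undoes each root with one cheap squaring; the sign is lost, so accept seed or -seed.
function slothVerify(beacon: bigint, seed: bigint, prime: bigint, iterations: bigint): boolean {
  for (let i = 0n; i < iterations; ++i) beacon = (beacon * beacon) % prime;
  seed %= prime;
  return beacon === seed || beacon === prime - seed;
}

// Toy values: prime 23 (23 % 4 == 3), seed 5, 3 iterations.
// The chain is 5 -> 8 -> 13 -> 6, and squaring back gives 6 -> 13 -> 8 -> 18 == 23 - 5.
const proof = slothCompute(5n, 23n, 3n);
console.log(proof); // 6n
console.log(slothVerify(proof, 5n, 23n, 3n)); // true
console.log(slothVerify(7n, 5n, 23n, 3n)); // false
```

Verification accepts either the seed or its negation because each square root is only defined up to sign.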

Next we need to be able to verify the Sloth VDF proof on chain, which is easy using the following library.

// SPDX-License-Identifier: MIT

// https://eprint.iacr.org/2015/366.pdf

pragma solidity ^0.8.11;

library SlothVDF {

/// @dev pow(base, exponent, modulus)

/// @param base base

/// @param exponent exponent

/// @param modulus modulus

function bexmod(

uint256 base,

uint256 exponent,

uint256 modulus

) internal pure returns (uint256) {

uint256 _result = 1;

uint256 _base = base;

for (; exponent > 0; exponent >>= 1) {

if (exponent & 1 == 1) {

_result = mulmod(_result, _base, modulus);

}

_base = mulmod(_base, _base, modulus);

}

return _result;

}

/// @dev compute sloth starting from seed, over prime, for iterations

/// @param _seed seed

/// @param _prime prime

/// @param _iterations number of iterations

/// @return sloth result

function compute(

uint256 _seed,

uint256 _prime,

uint256 _iterations

) internal pure returns (uint256) {

uint256 _exponent = (_prime + 1) >> 2;

_seed %= _prime;

for (uint256 i; i < _iterations; ++i) {

_seed = bexmod(_seed, _exponent, _prime);

}

return _seed;

}

/// @dev verify sloth result proof, starting from seed, over prime, for iterations

/// @param _proof result

/// @param _seed seed

/// @param _prime prime

/// @param _iterations number of iterations

/// @return true if squaring the proof `_iterations` times modulo the prime yields the seed or its negation

function verify(

uint256 _proof,

uint256 _seed,

uint256 _prime,

uint256 _iterations

) internal pure returns (bool) {

for (uint256 i; i < _iterations; ++i) {

_proof = mulmod(_proof, _proof, _prime);

}

_seed %= _prime;

if (_seed == _proof) return true;

if (_prime - _seed == _proof) return true;

return false;

}

}
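The reason verification is cheap enough to run on chain is that each iteration costs the verifier a single modular squaring, while the prover pays for a full modular exponentiation. As a rough illustration, the sketch below counts the multiplications in a square-and-multiply loop shaped like bexmod; the function names are hypothetical and the count ignores everything except modular multiplications.

```typescript
// Count the modular multiplications in a square-and-multiply loop like bexmod:
// one squaring for every bit of the exponent, plus one multiply for every set bit.
function multiplicationsPerPow(exponent: bigint): number {
  let count = 0;
  for (let e = exponent; e > 0n; e >>= 1n) {
    if ((e & 1n) === 1n) count += 1; // result = result * base
    count += 1; // base = base * base
  }
  return count;
}

// Prover multiplications per iteration, relative to the verifier's single squaring.
function proverToVerifierRatio(prime: bigint): number {
  return multiplicationsPerPow((prime + 1n) >> 2n);
}

// Toy prime 23: exponent 6 = 0b110, so 3 squarings + 2 multiplies.
console.log(proverToVerifierRatio(23n)); // 5
```

The ratio grows with the bit length of the prime, which is why a larger prime lengthens the delay for the prover without making each verification step any more expensive in multiplications.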

Randomness Library

Instead of using the proof directly as a single random number, we can use it as the input to a random number generator to produce multiple provable random numbers. To save a bit more gas, instead of hashing for a new number every time, we can shift the bits of the random number to the right and refill it when it reaches zero. This pattern is more efficient if implemented directly in your contract, but this library can be reused if you can accept the relaxed security.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.11;

library Randomness {

// RNG state, passed to the library functions as a storage reference

struct RNG {

uint256 seed;

uint256 nonce;

}

/// @dev get a random number

function getRandom(RNG storage _rng) external returns (uint256 randomness, uint256 random) {

return _getRandom(_rng, 0, 2**256 - 1, _rng.seed);

}

/// @dev get a random number

function getRandom(RNG storage _rng, uint256 _randomness) external returns (uint256 randomness, uint256 random) {

return _getRandom(_rng, _randomness, 2**256 - 1, _rng.seed);

}

/// @dev get a random number passing in a custom seed

function getRandom(

RNG storage _rng,

uint256 _randomness,

uint256 _seed

) external returns (uint256 randomness, uint256 random) {

return _getRandom(_rng, _randomness, 2**256 - 1, _seed);

}

/// @dev get a random number in range [0, _max)

function getRandomRange(

RNG storage _rng,

uint256 _max

) external returns (uint256 randomness, uint256 random) {

return _getRandom(_rng, 0, _max, _rng.seed);

}

/// @dev get a random number in range [0, _max)

function getRandomRange(

RNG storage _rng,

uint256 _randomness,

uint256 _max

) external returns (uint256 randomness, uint256 random) {

return _getRandom(_rng, _randomness, _max, _rng.seed);

}

/// @dev get a random number in range [0, _max) passing in a custom seed

function getRandomRange(

RNG storage _rng,

uint256 _randomness,

uint256 _max,

uint256 _seed

) external returns (uint256 randomness, uint256 random) {

return _getRandom(_rng, _randomness, _max, _seed);

}

/// @dev fulfill a random number request for the given inputs, incrementing the nonce, and returning the random number

function _getRandom(

RNG storage _rng,

uint256 _randomness,

uint256 _max,

uint256 _seed

) internal returns (uint256 randomness, uint256 random) {

// if the randomness is zero, we need to fill it

if (_randomness == 0) {

// increment the nonce in case we roll over

_randomness = uint256(

keccak256(

abi.encodePacked(_seed, _rng.nonce++, block.timestamp, msg.sender, blockhash(block.number - 1))

)

);

}

// mod to the requested range

random = _randomness % _max;

// shift bits to the right to get a new random number

randomness = _randomness >>= 4;

}

}
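The shift-and-refill behaviour of _getRandom can be modelled off chain to see what values it hands out. This is a minimal sketch, assuming a caller-supplied refill function in place of the keccak256 hash; createBitPool is a hypothetical name and the fixed refill value is only there to make the output predictable.

```typescript
// Off-chain model of the pattern: take the pool modulo `max`, then drop the
// lowest 4 bits, refilling only when the pool has been shifted down to zero.
function createBitPool(refill: () => bigint): (max: bigint) => bigint {
  let pool = 0n;
  return (max: bigint): bigint => {
    if (pool === 0n) pool = refill(); // pool exhausted, draw fresh randomness
    const value = pool % max; // reduce to the range [0, max)
    pool >>= 4n; // discard 4 bits for the next request
    return value;
  };
}

// A fixed refill value (assumption for the demo) makes the behaviour visible.
const next = createBitPool(() => 0xabcdn);
console.log(next(16n)); // 13n (0xd)
console.log(next(16n)); // 12n (0xc)
console.log(next(16n)); // 11n (0xb)
console.log(next(16n)); // 10n (0xa)
console.log(next(16n)); // 13n again, after a refill
```

With a max of 16 each request consumes exactly the 4 bits it returns. With a larger max, consecutive results share most of their bits, which is the relaxed security mentioned above.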

This example uses the Randomness library to generate multiple random numbers from a single proof in an efficient way. Note that this is a less secure application, though still valid for many use cases.

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.11;

import './libraries/Randomness.sol';

import './libraries/SlothVDF.sol';

contract RandomVDFv2 {

using Randomness for Randomness.RNG;

Randomness.RNG private _rng;

// large prime

uint256 public prime = 432211379112113246928842014508850435796007;

// adjust for block finality

uint256 public iterations = 1000;

// increment nonce to increase entropy

uint256 private nonce;

// address -> vdf seed

mapping(address => uint256) public seeds;

// commit funds/tokens/etc here

function createSeed() external payable {

// create a pseudo random seed as the input

(, uint256 seed) = _rng.getRandom();

seeds[msg.sender] = seed;

}

function prove(uint256 proof) external {

// see if the proof is valid for the seed associated with the address

require(SlothVDF.verify(proof, seeds[msg.sender], prime, iterations), 'Invalid proof');

uint256 _randomness;

uint256 _random;

(_randomness, _random) = _rng.getRandom(_randomness, proof);

(_randomness, _random) = _rng.getRandom(_randomness, proof);

(_randomness, _random) = _rng.getRandom(_randomness, proof);

}

}

The post Provable Randomness with VDF appeared first on Justin Silver.

]]>The post Fantom Lachesis Full Node RPC appeared first on Justin Silver.

]]>Create an Alpine Linux image to run the lachesis node for the Fantom cryptocurrency.

FROM alpine:latest as build-stage

ARG LACHESIS_VERSION=release/1.0.0-rc.0

ENV GOROOT=/usr/lib/go

ENV GOPATH=/go

ENV PATH=$GOROOT/bin:$GOPATH/bin:/build:$PATH

RUN set -xe; \

apk add --no-cache --virtual .build-deps \

# get the build dependencies for go

git make musl-dev go linux-headers; \

# install fantom lachesis from github

mkdir -p ${GOPATH}; cd ${GOPATH}; \

git clone --single-branch --branch ${LACHESIS_VERSION} https://github.com/Fantom-foundation/go-lachesis.git; \

cd go-lachesis; \

make build -j$(nproc); \

mv build/lachesis /usr/local/bin; \

rm -rf /go; \

# remove our build dependencies

apk del .build-deps;

FROM alpine:latest as lachesis

# copy the binary

COPY --from=build-stage /usr/local/bin/lachesis /usr/local/bin/lachesis

COPY run.sh /usr/local/bin

WORKDIR /root

ENV LACHESIS_PORT=5050

ENV LACHESIS_HTTP=18545

ENV LACHESIS_API=eth,ftm,debug,admin,web3,personal,net,txpool

ENV LACHESIS_VERBOSITY=2

EXPOSE ${LACHESIS_PORT}

EXPOSE ${LACHESIS_HTTP}

VOLUME [ "/root/.lachesis" ]

CMD ["run.sh"]

The run.sh script just starts the node with the ports and options you set in the environment.

#!/usr/bin/env sh

set -xe

lachesis \

--port ${LACHESIS_PORT} \

--http \

--http.addr "0.0.0.0" \

--http.port ${LACHESIS_HTTP} \

--http.api "${LACHESIS_API}" \

--nousb \

--verbosity ${LACHESIS_VERBOSITY}

Use docker-compose to define the TCP/UDP ports to expose as well as a data volume to persist the blockchain data.

version: '3.4'

services:

  lachesis:

    image: doublesharp/fantom-lachesis:latest

    restart: always

    ports:

      - '5050:5050'

      - '5050:5050/udp'

      - '18545:18545'

    volumes:

      - lachesis:/root/.lachesis

    environment:

      LACHESIS_VERBOSITY: 2

volumes:

  lachesis: {}
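Once the container is up, a quick way to confirm the RPC is answering is to ask it for the current block number. The sketch below assumes Node 18+ (for the global fetch), that the node is reachable at http://localhost:18545 as mapped in the compose file, and that the standard eth_blockNumber method is served because the eth API is enabled. The helper names are illustrative.

```typescript
// Build a JSON-RPC 2.0 request body.
function buildRpcRequest(method: string, params: unknown[] = [], id: number = 1): string {
  return JSON.stringify({ jsonrpc: '2.0', id, method, params });
}

// Parse a hex quantity such as "0x1b4" into a number.
function parseHexQuantity(hex: string): number {
  return parseInt(hex, 16);
}

// Ask the node for its latest block number.
async function getBlockNumber(url: string): Promise<number> {
  const response = await fetch(url, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: buildRpcRequest('eth_blockNumber'),
  });
  const { result } = (await response.json()) as { result: string };
  return parseHexQuantity(result);
}

// Usage, assuming the container is running locally:
// getBlockNumber('http://localhost:18545').then((n) => console.log('block', n));
```

A block number that keeps increasing between calls is a reasonable sign that the node is syncing.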


]]>The post Alpine Linux PHP + iconv fix appeared first on Justin Silver.

]]>To use PHP with iconv on Alpine Linux – in a Docker container for example – you need to use the preloadable iconv library, which was previously provided with the gnu-libiconv package, but was removed after Alpine v3.13. After recently rebuilding an Alpine image and running a PHP script that required iconv, I saw the following error:

Notice: iconv(): Wrong charset, conversion from `UTF-8' to `UTF-8//IGNORE' is not allowed

To work around it I installed the gnu-libiconv package from the v3.13 repo. For my projects I went ahead and exported the preloadable binary once it was built as well so that I could just COPY it into the image instead of building it – in my case it’s only for Alpine after all.

You can do this by using an Alpine image tag of alpine:3.13 to add gnu-libiconv and compile /usr/lib/preloadable_libiconv.so, then copy it to a volume to save the binary once the container exits – the output folder is called ./out in this example.

% docker run -v $(pwd)/out:/out -it alpine:3.13 \

/bin/sh -c 'apk add --no-cache gnu-libiconv && cp -f /usr/lib/preloadable_libiconv.so /out/preloadable_libiconv.so'

fetch https://dl-cdn.alpinelinux.org/alpine/v3.13/main/x86_64/APKINDEX.tar.gz

fetch https://dl-cdn.alpinelinux.org/alpine/v3.13/community/x86_64/APKINDEX.tar.gz

(1/1) Installing gnu-libiconv (1.15-r3)

Executing busybox-1.32.1-r6.trigger

OK: 8 MiB in 15 packages

% ls -la out/preloadable_libiconv.so

-rw-r--r-- 1 justin staff 1005216 Apr 23 14:32 out/preloadable_libiconv.so

Once you have the prebuilt binary you can use COPY in your Dockerfile to use it without needing to build it.

# copy preloadable_libiconv.so from prebuilt

COPY /rootfs/usr/lib/preloadable_libiconv.so /usr/lib/preloadable_libiconv.so

ENV LD_PRELOAD /usr/lib/preloadable_libiconv.so php

If you prefer to install the older package that includes the preloadable binary in a different Alpine Dockerfile you can specify an older repository in a RUN command, like so:

FROM wordpress:5.7.1-php7.4-fpm-alpine

# ... some config

RUN apk add --no-cache \

--repository http://dl-cdn.alpinelinux.org/alpine/v3.13/community/ \

--allow-untrusted \

gnu-libiconv

ENV LD_PRELOAD /usr/lib/preloadable_libiconv.so php


]]>The post Autodesk Fusion 360 Using eGPU appeared first on Justin Silver.

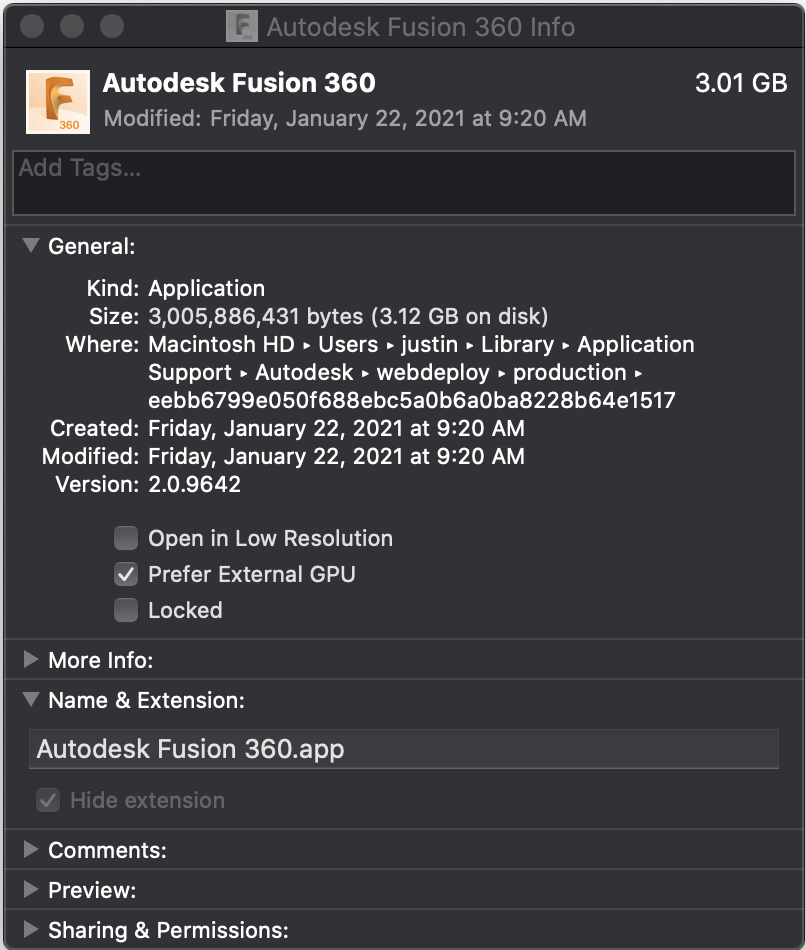

]]>Open a Finder window showing the latest version of Fusion 360 in the webdeploy folder.

F360_APP=Autodesk\ Fusion\ 360

F360_WEBDEPLOY="$HOME/Applications/$F360_APP.app/Contents/MacOS/$F360_APP"

F360_DIR=$(cat "$F360_WEBDEPLOY" | grep destfolder | head -n 1 | cut -c 12- | tr -d '"')

open -R "$F360_DIR/$F360_APP.app"

Press ⌘ + I to open the Get Info window and check “Prefer External GPU”.

The next time you launch Fusion 360 it should use the external GPU instead of the internal options.


]]>The post Chocolate Chip Cookies appeared first on Justin Silver.

]]>Ingredients

- 300g 00 strong flour (substitute AP or half AP and half 00. ~2 3/8 cups)

- 3/4tsp baking soda

- 225g room temperature butter (2 sticks)

- 100g granulated sugar (~1/2 cups)

- 200g brown sugar (~1 cup)

- 5tsp vanilla paste (or extract)

- 1tsp kosher salt

- 2 eggs

- ~300g semi sweet chocolate wafers (~1.5 cups+)

- Smoked sea salt flakes (for topping)

Steps

- Mix butter in stand mixer with flat beater until smooth

- The butter needs to be room temperature, leave it out overnight. This step is important.

- Add sugar and mix on high until smooth, scrape sides a few times to make sure all is incorporated.

- Add salt, vanilla, eggs and mix on high, scrape sides again.

- Mix flour and baking soda in a bowl with a whisk.

- Mix the flour into the creamed butter in 3 parts until barely incorporated each time.

- Don’t quite finish mixing in the last part. You want to avoid gluten development.

- Mix in chocolate until just combined, this will incorporate the last of the flour too.

- Put the dough in a container/silicone bag/pan with a lid and chill for 24-48 hours.

- Letting the dough rest lets enzymes work on the dough, which makes it more flavorful. You can skip it if you are impatient.

- Preheat oven to 350F.

- Weigh out into 50g+ pieces, roll in your hands to make a ball making sure there is dough on the bottom of each cookie.

- Place onto silicone baking mat or parchment paper evenly spaced.

- Sprinkle smoked salt flakes on top of each cookie ball, excess will end up on the bottom of the cookie.

- Cook for 10-12 mins, remove from the oven and drop the pan on the counter to flatten.

- Dropping the pan collapses the cookies before they are finished cooking, which gives them a chewier consistency once they cool.

- Cook for another 1-2 mins until edges just brown.

- Remove from the oven and pan and put on a rack to cool completely.


]]>